Two weeks into the MSc Applied Psychology, and it’s been interesting to find that so many of the famous (or notorious) studies I’ve come across have significant factors I wasn’t aware of. Knowing them gives me a new perspective on the studies and reminds me it’s a good idea to:

- read around the studies

- always go back to the actual studies, rather than relying on textbook (or other) summaries of the processes and findings

- question everything

Some examples:

Before Milgram’s study on obedience (using electric shocks), the prediction was that fewer than 3% of participants would administer the highest voltage to their ‘student’, in contrast to the 65% who actually did. Further variations of the study (by Milgram himself) showed that obedience in this scenario was significantly influenced by context: it dropped when the study was run outside the prestigious Yale laboratories and away from the oversight of a highly respected academic.

Although it was probably his then-girlfriend’s horror that did most to end the Stanford Prison Experiment early, Zimbardo himself said the study was so ethically dubious that it should not be replicated. Nonetheless, it was replicated in a serialised documentary for the BBC (Prison Study, 2002), and the findings were very different from those of the original study.

The Piliavin ‘Good Samaritan’ study on ‘the bystander effect’ showed that neither race nor intoxication generally prevented onlookers from helping someone in need (on a subway train).

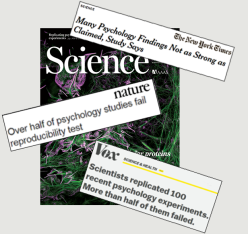

Another aspect that has come up again and again is what is known as the ‘replication crisis’ in psychology. Basically, experiments done to verify the findings of previous ones often fail to support them. As science is built on the notion of replicable results, this throws into question the scientific nature of the practice of psychological research.

The problem is compounded by the fact that it is very difficult to get published if your study is a replication or if your hypothesis was not supported (publication bias). Researchers are therefore pressured to run studies that support their hypothesis or, when the results don’t support it, to bury them in a cupboard somewhere…or falsify the results. This has serious implications for scientific knowledge, because disproving ideas is just as important as supporting them: if enough evidence has been gathered to falsify a conclusion, it is in everyone’s interest that this knowledge be shared. But how? If journals won’t publish, how can researchers let others know about their findings? It’s an ongoing issue that some are trying to rectify, for example with pre-registration of studies, but it’s clearly something that needs to be taken into account before accepting the findings of any study as generalisable.

But then we have these guys at Harvard who tell us the whole thing’s a misunderstanding and that “the data are consistent with the opposite conclusion, namely, that the reproducibility of psychological science is quite high” (Gilbert et al., 2015).

What’s a poor student to do?!